by Anna Szemiot

10 min read

The founders of StreamX, Marta and Michał Cukierman, recently appeared on the Arbory Digital Experiences podcast to discuss their technical insights from adaptTo() 2024.

They joined host Tad Reeves, principal architect at Arbory Digital, in a conversation on new AEM features and broader trends in the AEM ecosystem.

The episode, recorded right after their return from Berlin, focused on the latest developments in Adobe Experience Manager (AEM) they learned about during the conference.

Here are a few highlights from their hour-long discussion:

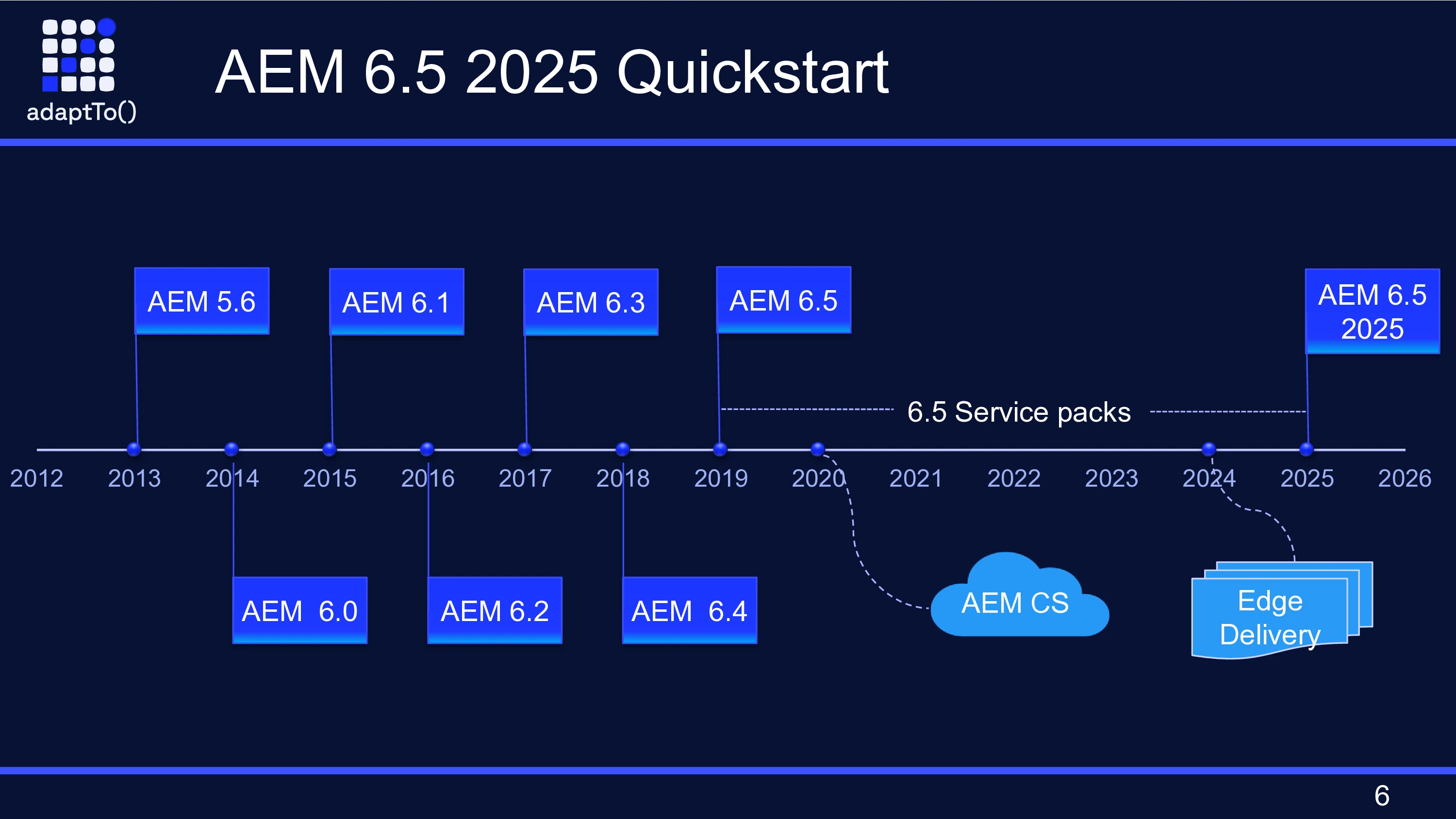

One of adaptTo()'s biggest announcements was the upcoming release of AEM 6.5 running on JDK 17. The internal codename "AEM 6.5 2025 Edition" might be a mouthful, but the implications are significant. The move is driven by older Java versions reaching end of life and by the need to stay ahead of security vulnerabilities. We can expect this new version to roll out by late 2024 or early 2025.

As Tad Reeves pointed out, Adobe plans to eventually migrate Cloud Service to JDK 21, but this will be a gradual process to minimize disruption for customers. A new pattern detector will help identify potential issues when upgrading to the new version.

A beta program will be made available in December. Enroll here if you are interested in testing the new version.

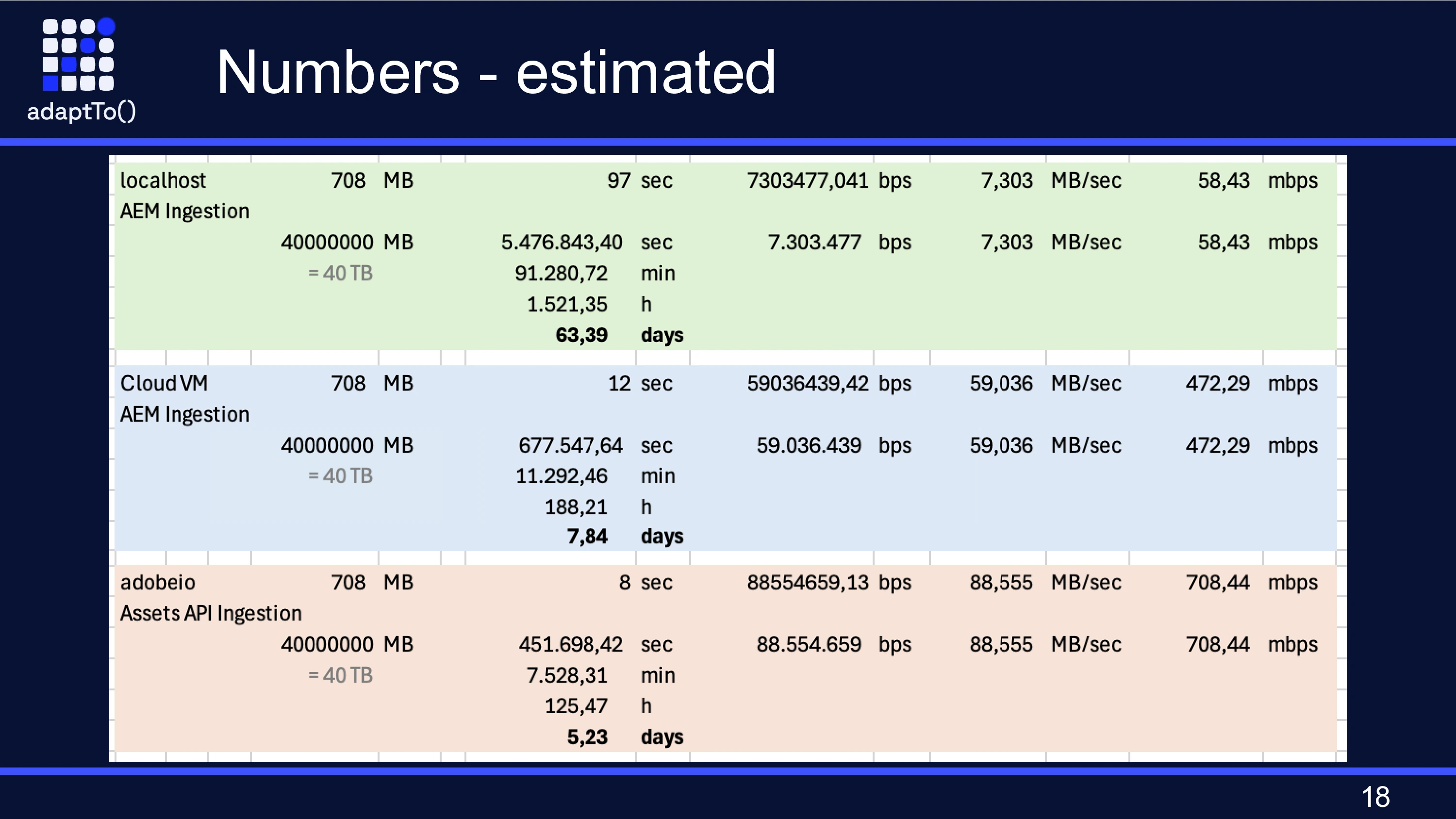

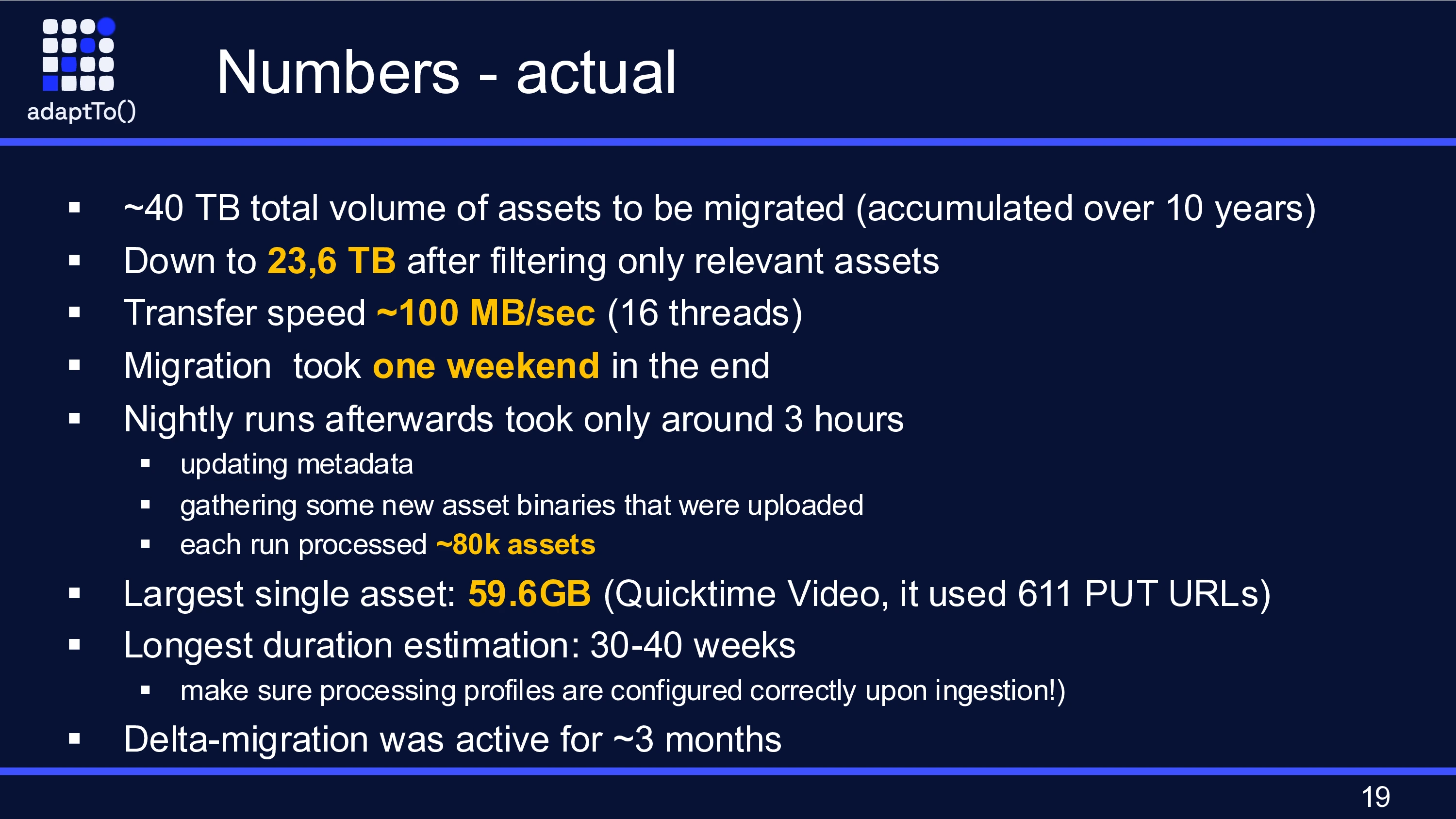

Georg Henzler's adaptTo() 2024 presentation detailed the move of a staggering 40TB of assets to AEM, offering a masterclass in problem-solving. The presentation highlighted the challenges of this migration, emphasizing the importance of data-driven decision-making and creative solutions.

He discussed different approaches considered, the calculations performed to estimate migration time, and the final solution that enabled migration over a weekend.

The key takeaways? Purpose-built platforms often become outdated faster than products, and migration code is inherently temporary. That's why a continuous approach, which moves data deltas while users keep working in the old system, is crucial.

In the podcast discussion, Michał Cukierman elaborated on that, explaining how migration code’s temporary nature comes from the fact that migrations are typically one-time events, undertaken to transfer data from legacy systems to a new platform. Once the data has been successfully migrated, the code used to facilitate the transfer becomes obsolete.

In this case, the most pragmatic approach recognizes that the primary goal is to transfer the data effectively and within the required timeframe. When dealing with massive data migrations, the focus shifts to evaluating different migration strategies, running experiments, and extrapolating results to determine the most efficient way.
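The "run an experiment, then extrapolate" step can be sketched in a few lines. This is a minimal illustration, not the calculation from the talk: only the 40 TB total comes from the presentation, while the sample size, sample duration, worker count, and the function name `estimate_migration_hours` are hypothetical. It also assumes throughput scales linearly with the number of workers, which real systems rarely achieve.

```python
def estimate_migration_hours(total_bytes, sample_bytes, sample_seconds, parallel_workers=1):
    """Extrapolate total migration time from one timed sample run.

    Assumes throughput scales linearly with the worker count, so the
    result is an optimistic estimate rather than a guarantee.
    """
    if sample_bytes <= 0 or sample_seconds <= 0 or parallel_workers < 1:
        raise ValueError("sample size, duration, and worker count must be positive")
    throughput = sample_bytes / sample_seconds  # bytes per second for one worker
    total_seconds = total_bytes / (throughput * parallel_workers)
    return total_seconds / 3600


TB = 10**12
GB = 10**9
WEEKEND_HOURS = 48

# Hypothetical experiment: one worker moved 50 GB in 10 minutes.
single = estimate_migration_hours(40 * TB, 50 * GB, 600)
eight = estimate_migration_hours(40 * TB, 50 * GB, 600, parallel_workers=8)

print(f"1 worker:  {single:.1f} h")  # 133.3 h, does not fit in a weekend
print(f"8 workers: {eight:.1f} h")   # 16.7 h, fits with headroom
```

With these made-up numbers, a single worker would overshoot a 48-hour weekend window by a wide margin, while eight parallel workers would fit. That is the kind of comparison that lets a team pick a strategy before committing to it.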

From StreamX's point of view, this raises the possibility of using StreamX for incremental migrations. StreamX can handle the continuous synchronization of data updates between the two systems, leaving the migration code to address only the logic specific to each.
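To make the idea of moving deltas concrete, here is a minimal sketch of one incremental sync pass. It is not StreamX's API and not the approach from the talk: plain dictionaries stand in for the legacy system and the target, and the names `compute_delta` and `sync_once` are hypothetical. Each pass copies only the assets whose content hash has changed and removes the ones deleted at the source.

```python
import hashlib


def compute_delta(source, target_hashes):
    """Return (to_copy, to_delete) for one sync pass.

    source:        path -> bytes, the current state of the legacy system
    target_hashes: path -> SHA-256 hex digest of what was already migrated
    """
    to_copy = sorted(
        path for path, content in source.items()
        if target_hashes.get(path) != hashlib.sha256(content).hexdigest()
    )
    to_delete = sorted(path for path in target_hashes if path not in source)
    return to_copy, to_delete


def sync_once(source, target_hashes):
    """Apply one delta pass and record the new state of the target."""
    to_copy, to_delete = compute_delta(source, target_hashes)
    for path in to_copy:
        # A real migration would transfer the asset here.
        target_hashes[path] = hashlib.sha256(source[path]).hexdigest()
    for path in to_delete:
        del target_hashes[path]
    return to_copy, to_delete


legacy = {"/a.png": b"v1", "/b.pdf": b"v1"}
migrated = {}

first = sync_once(legacy, migrated)   # initial bulk pass copies everything

# Users keep working in the old system between passes.
legacy["/a.png"] = b"v2"
del legacy["/b.pdf"]
legacy["/c.jpg"] = b"v1"

second = sync_once(legacy, migrated)  # only the changes move
third = sync_once(legacy, migrated)   # nothing left to do
```

Because each pass is small once the initial bulk copy is done, the old system can stay in use until the final cutover.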

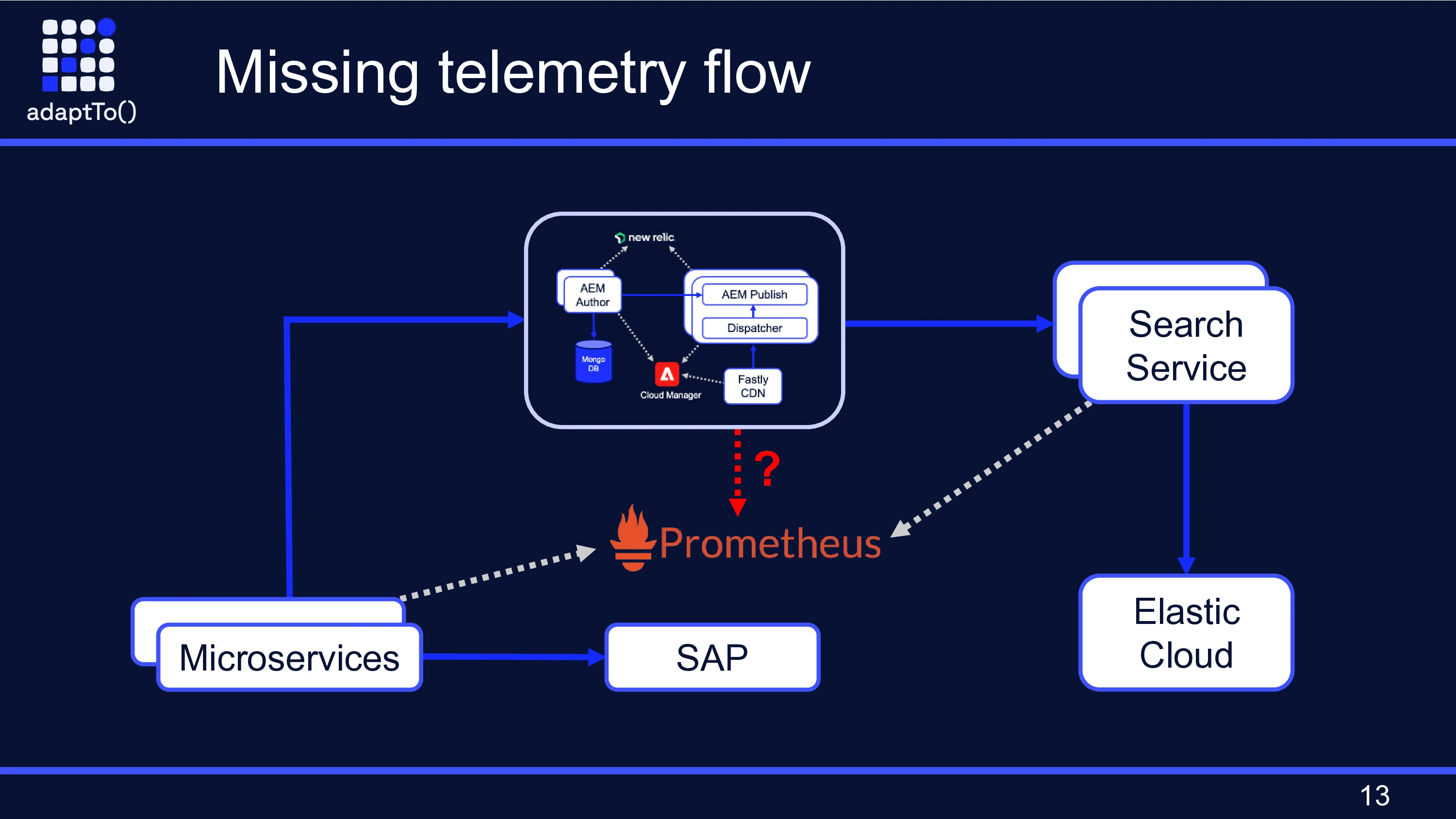

On the third day, Roy Teeuwan and Barry d'Hoine's presentation highlighted the importance of observability in cloud-based AEM deployments, especially AEM as a Cloud Service.

As Michał noted later, traditional monitoring techniques that rely on direct server access are no longer viable in a cloud environment: "20 years ago we used to rely on application servers for monolithic applications running on a single machine. AEM is some step forward because you got publishers that you can distribute the content to multiple machines, but still at the core it's a monolithic application that can break."

The presentation on OpenTelemetry showcased its ability to overcome the limitations of traditional monitoring tools when applied to AEM as a Cloud Service. The delayed and aggregated nature of logs and metrics provided by services like Splunk and New Relic makes real-time troubleshooting a challenge.

In contrast, OpenTelemetry enables developers to gain granular, real-time insights into system behavior by collecting telemetry data, including metrics, traces, and logs. This data can then be exported to a visualization platform like Datadog, providing a comprehensive view of system performance and enabling developers to pinpoint issues with precision.

StreamX utilizes OpenTelemetry for its own observability, so as Tad Reeves put it: "you can leverage OpenTelemetry to trace requests across AEM and StreamX."

Integrating StreamX into an AEM architecture further enhances the overall monitoring capabilities of the system. Developers can leverage OpenTelemetry to:

- Understand how data flows between the two systems, identifying potential bottlenecks or performance issues
- Gain visibility into StreamX data pipelines and monitor their performance, ensuring data is processed and delivered efficiently
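Tracing a request across two systems depends on both of them sharing one trace ID. OpenTelemetry SDKs support the W3C Trace Context standard for this, which carries the ID in a `traceparent` HTTP header. The sketch below uses only the Python standard library to show the header format and how a downstream hop keeps the trace ID while creating its own span ID. It is an illustration of the mechanism, not the OpenTelemetry SDK. The function names are hypothetical, and a real project should rely on the SDK's propagators instead of hand-rolling this.

```python
import re
import secrets

# traceparent format: version "00", 32-hex trace id, 16-hex parent span id, 2-hex flags
TRACEPARENT_RE = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


def new_traceparent(sampled=True):
    """Start a new trace, as the first system handling a request would."""
    flags = "01" if sampled else "00"
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-{flags}"


def parse_traceparent(header):
    """Return the header's fields as a dict, or None if it is malformed."""
    match = TRACEPARENT_RE.fullmatch(header.strip())
    if match is None:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero ids are invalid in the W3C format
    return {
        "trace_id": trace_id,
        "parent_span_id": span_id,
        "sampled": bool(int(flags, 16) & 1),
    }


def child_traceparent(incoming):
    """Build the header a downstream hop sends on: same trace id, new span id."""
    parent = parse_traceparent(incoming)
    if parent is None:
        return new_traceparent()  # no valid context arrived, so start a fresh trace
    flags = "01" if parent["sampled"] else "00"
    return f"00-{parent['trace_id']}-{secrets.token_hex(8)}-{flags}"


# Hypothetical flow: the first system starts the trace, the second continues it.
incoming = new_traceparent()
outgoing = child_traceparent(incoming)
same_trace = parse_traceparent(incoming)["trace_id"] == parse_traceparent(outgoing)["trace_id"]
```

Because both hops report spans under the same trace ID, a tracing backend can stitch them into a single end-to-end view of the request.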

Perhaps the most exciting takeaway from adaptTo() was the viability of hybrid solutions, embracing the "right tool for the job" philosophy at a project level.

Edge Delivery Services were a major focus at the conference, praised for their potential to significantly enhance performance and scalability, especially for static content.

During the panel discussion, we learned that EDS is based on a very opinionated approach that ruthlessly prioritizes page performance. EDS also builds on the familiarity content authors already have with document editors like Google Docs or Word, which counts in its favor when content editors have a seat on the committee choosing a CMS.

However, as Marta Cukierman pointed out in the podcast, the discussions also highlighted that Edge Delivery Services aren't a one-size-fits-all solution.

In Mariia Lukianet's presentation, Adobe itself showcased this hybrid approach with its redesigned adobe.com, using EDS for content-heavy sections prioritizing speed while leveraging traditional AEM for areas requiring complex authoring workflows and fine-grained permissions management.

This real-world example demonstrated how combining different tools can effectively address the complex needs of a modern website. It also shows how StreamX could enhance and complement Edge Delivery Services within the AEM ecosystem. Acting as an intermediary layer, StreamX would bridge the gap between source systems such as AEM, PIM, and external APIs and the Edge Delivery layer, ensuring data consistency and performance across the entire architecture.

The resounding message from adaptTo() is that the AEM world is ripe with opportunities for innovation. By embracing the "right tool for the job" philosophy, developers can build digital experiences that are not only high-performing and scalable but also tailored to the unique needs of each project.